Optimizing ETL Workflows Using Databricks And Delta Lake

By Deepika Geetla, HEXstream data engineer

In the world of big data, efficient ETL (extract, transform, load) workflows are the backbone of data-engineering operations. As businesses generate massive amounts of data, optimizing these workflows for scalability, reliability and speed is crucial.

A powerful way to achieve these goals is by leveraging Databricks with Delta Lake, which together provide a robust solution for modern ETL pipelines.

In this article, we will explore how Databricks and Delta Lake work together to enhance ETL processes, ensuring high performance, data consistency and scalability.

What is Databricks?

Databricks is a unified data-analytics platform built on Apache Spark, designed for efficiently handling large-scale data workloads. It supports both batch and real-time data pipelines, making it a preferred choice for data engineering, machine learning and analytics.

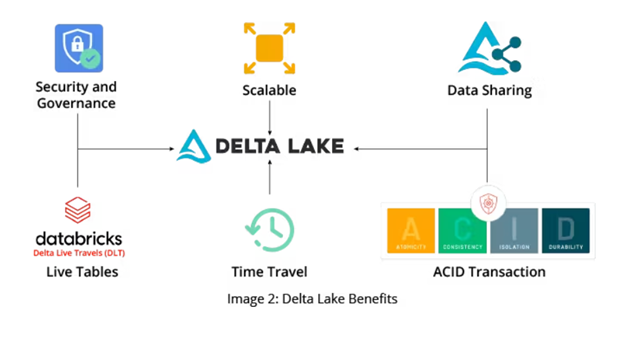

What is Delta Lake?

Delta Lake is an open-source storage layer that enhances data lakes with ACID transactions, schema enforcement and versioning. It stores data as Apache Parquet files alongside a transaction log, which keeps it well suited to analytical workloads. Delta Lake helps ETL workflows maintain data integrity, reliability and performance at scale.

Why use Databricks and Delta Lake together?

While Databricks provides high-speed data processing, Delta Lake improves data quality, consistency and management. By integrating the two, organizations can:

- Ensure data reliability with ACID transactions

- Improve performance through data clustering, caching and file compaction

- Enable incremental processing instead of full reprocessing

- Support time travel for rollback and historical analysis

- Manage both batch and streaming data seamlessly

Advantages of using Databricks with Delta Lake

1. Ensuring data quality and consistency

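- ACID transactions: Ensure atomicity, consistency, isolation and durability, so a failed or concurrent write cannot leave a table partially updated

- Schema enforcement: Rejects writes whose columns or data types do not match the table schema, unless schema evolution is explicitly enabled

- Data validation: Table constraints such as NOT NULL and CHECK reject records that violate defined rules before they enter the pipeline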
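As a minimal sketch of the enforcement and validation points above, the snippet below builds a Delta Lake `ALTER TABLE ... ADD CONSTRAINT ... CHECK` statement and shows a plain append, which Delta rejects when the incoming schema does not match the table. The function names, the table `sales.orders` and the constraint `positive_amount` are hypothetical, and `df` is assumed to be a Spark DataFrame supplied by the caller.

```python
def add_check_constraint_sql(table: str, name: str, condition: str) -> str:
    """Build the Delta Lake SQL that attaches a CHECK constraint to a table.

    Once the constraint exists, writes containing rows that violate
    `condition` fail instead of landing in the table.
    """
    return f"ALTER TABLE {table} ADD CONSTRAINT {name} CHECK ({condition})"


def append_strict(df, table: str) -> None:
    """Append a DataFrame to a Delta table without enabling schema evolution.

    Assumption: `df` is a pyspark.sql.DataFrame. Because the mergeSchema
    option is not set, a schema mismatch makes the write fail rather than
    silently altering the table.
    """
    df.write.format("delta").mode("append").saveAsTable(table)


if __name__ == "__main__":
    # Hypothetical table and constraint names, for illustration only.
    print(add_check_constraint_sql("sales.orders", "positive_amount", "amount > 0"))
```

In a notebook, the generated statement would typically be passed to `spark.sql(...)` once, after which `append_strict` can be used for each load.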

2. Boosting performance and reducing costs

Traditional data lakes often suffer from fragmentation, leading to slow queries and high costs. Delta Lake solves this with:

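- Compaction of small files: Merges many small files into fewer, larger ones, reducing file-listing overhead and query latency

- Clustering for faster queries: Uses techniques like ZORDER to co-locate related values in the same files, speeding up filtered queries

- Caching and data skipping: Caches frequently read data and uses per-file statistics to skip files that cannot match a filter, reducing the need to scan entire datasets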
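Compaction and ZORDER clustering are both triggered with the Delta Lake `OPTIMIZE` command. The sketch below only assembles the statement; the helper name and the table and column names are hypothetical, and which columns are worth clustering on depends on the filters your queries actually use.

```python
from typing import Sequence


def optimize_sql(table: str, zorder_cols: Sequence[str] = ()) -> str:
    """Build an OPTIMIZE statement, optionally with ZORDER BY.

    Without columns, OPTIMIZE only compacts small files. With columns,
    it also co-locates rows with similar values in the same files.
    """
    stmt = f"OPTIMIZE {table}"
    if zorder_cols:
        stmt += " ZORDER BY (" + ", ".join(zorder_cols) + ")"
    return stmt


if __name__ == "__main__":
    # Hypothetical table and columns, for illustration only.
    print(optimize_sql("sales.orders", ["customer_id", "order_date"]))
```

The resulting string can be run with `spark.sql(...)`, for example as the last task of a nightly job.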

3. Enabling incremental data processing

Instead of reloading entire datasets, Delta Lake supports:

- Merge operations: Updates only changed records, improving efficiency

- Change data capture (CDC): Detects and processes only new or modified records, for example by reading a table's change data feed
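A merge (upsert) is usually written as a Delta Lake `MERGE INTO` statement. The following sketch builds one from a list of key columns; the helper name and the table names `sales.orders` and `staged_orders` are hypothetical, and it assumes the source and target share the same column names so that `UPDATE SET *` and `INSERT *` apply.

```python
from typing import Sequence


def merge_upsert_sql(target: str, source: str, key_cols: Sequence[str]) -> str:
    """Build a MERGE INTO statement that updates matches and inserts the rest.

    Rows in `source` whose keys match a row in `target` overwrite it;
    all other source rows are inserted. Untouched target rows stay as-is.
    """
    if not key_cols:
        raise ValueError("at least one key column is required")
    on_clause = " AND ".join(f"t.{c} = s.{c}" for c in key_cols)
    return (
        f"MERGE INTO {target} AS t\n"
        f"USING {source} AS s\n"
        f"ON {on_clause}\n"
        "WHEN MATCHED THEN UPDATE SET *\n"
        "WHEN NOT MATCHED THEN INSERT *"
    )


if __name__ == "__main__":
    # Hypothetical table names, for illustration only.
    print(merge_upsert_sql("sales.orders", "staged_orders", ["order_id"]))
```

Only the records present in the staged source are read and rewritten, which is what makes this cheaper than reloading the full dataset.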

4. Supporting Time Travel and data recovery

One of Delta Lake’s standout features is Time Travel, which enables users to access previous data versions.

- Roll back mistakes: Instantly revert to an earlier version if errors occur

- Data auditing: Keep track of historical changes for compliance and governance
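Time Travel is exposed through a few Delta Lake SQL statements: `DESCRIBE HISTORY` lists table versions, `VERSION AS OF` reads an old one, and `RESTORE TABLE` rolls the table back. The helpers below are an illustrative sketch; their names and the table `sales.orders` are hypothetical.

```python
def history_sql(table: str) -> str:
    """List the table's versions with their timestamps and operations."""
    return f"DESCRIBE HISTORY {table}"


def read_version_sql(table: str, version: int) -> str:
    """Query the table as it existed at a given version number."""
    return f"SELECT * FROM {table} VERSION AS OF {int(version)}"


def restore_sql(table: str, version: int) -> str:
    """Roll the table back to a given version number."""
    return f"RESTORE TABLE {table} TO VERSION AS OF {int(version)}"


if __name__ == "__main__":
    # Hypothetical table name and version, for illustration only.
    for stmt in (
        history_sql("sales.orders"),
        read_version_sql("sales.orders", 3),
        restore_sql("sales.orders", 3),
    ):
        print(stmt)
```

A typical recovery flow would inspect the history first, confirm the good version with a read, and only then restore.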

Tactics for optimizing ETL workflows with Databricks and Delta Lake

Data ingestion: Load data from multiple sources (cloud storage, databases, APIs) into Delta Lake tables. Use Auto Loader in Databricks to ingest new files incrementally as they arrive in cloud storage.

Data transformation: Perform cleansing, deduplication and aggregations using Spark SQL and Delta Lake. Optimize transformations with partitioning and ZORDER clustering.
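The ingestion step can be sketched with Auto Loader, which is exposed in Databricks as the `cloudFiles` streaming source. This is an assumption-laden outline rather than a definitive pipeline: the function names, paths and table name are hypothetical, `spark` is assumed to be an active session on a Databricks cluster, and reusing the checkpoint path as the schema location is one common choice among several.

```python
def auto_loader_options(file_format: str, schema_location: str) -> dict:
    """Return the reader options Auto Loader needs for schema-inferred ingestion."""
    return {
        "cloudFiles.format": file_format,            # e.g. "json", "csv", "parquet"
        "cloudFiles.schemaLocation": schema_location,  # where the inferred schema is tracked
    }


def start_ingest(spark, source_path: str, target_table: str,
                 checkpoint_path: str, file_format: str = "json"):
    """Incrementally load new files from source_path into a Delta table.

    Assumption: runs on Databricks, where the "cloudFiles" source exists.
    The availableNow trigger processes all pending files and then stops,
    which suits scheduled batch-style runs.
    """
    stream = (
        spark.readStream.format("cloudFiles")
        .options(**auto_loader_options(file_format, checkpoint_path))
        .load(source_path)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_path)
        .trigger(availableNow=True)
        .toTable(target_table)
    )


if __name__ == "__main__":
    # Hypothetical checkpoint path, for illustration only.
    print(auto_loader_options("json", "/tmp/checkpoints/orders"))
```

Because the checkpoint records which files were already processed, rerunning the job picks up only files that arrived since the last run.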

Data loading: Store transformed data into Delta format for efficient querying. Use Databricks SQL for reporting and analytics.

Scheduling and orchestration: Automate ETL workflows using Databricks Workflows or Apache Airflow.

Monitoring and error handling: Use Delta Lake transaction logs to track changes and debug failures. Implement alerting mechanisms for failed jobs.

Best practices for optimizing Databricks and Delta Lake ETL workflows

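- Partition data efficiently: Partition large tables on low-cardinality columns that queries filter on, such as a date, so Spark can skip irrelevant partitions and process the rest in parallel

- Use Delta caching (now called disk caching in Databricks): Reduces query execution time by keeping copies of frequently accessed data on the cluster's local storage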

- Leverage auto-scaling: Dynamically adjust cluster size based on workload to optimize costs

- Optimize storage format: Store data in Parquet or Delta format for better compression and performance

- Enable data lineage tracking: Maintain audit logs for better governance and compliance
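Two of the practices above, partitioning and caching, can be illustrated briefly. The sketch assumes a Databricks cluster for the cache setting (`spark.databricks.io.cache.enabled` controls the Databricks disk cache, the feature the list refers to as delta caching); the function names, table name and columns are hypothetical.

```python
from typing import Mapping, Sequence


def create_partitioned_table_sql(table: str, columns: Mapping[str, str],
                                 partition_cols: Sequence[str]) -> str:
    """Build a CREATE TABLE statement for a partitioned Delta table.

    `columns` maps column names to SQL types, in the desired order.
    """
    cols = ", ".join(f"{name} {sql_type}" for name, sql_type in columns.items())
    parts = ", ".join(partition_cols)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} ({cols}) "
        f"USING DELTA PARTITIONED BY ({parts})"
    )


def enable_disk_cache(spark) -> None:
    """Turn on the Databricks disk cache for the current session.

    Assumption: `spark` is a session on a Databricks cluster whose
    worker nodes have local storage available for caching.
    """
    spark.conf.set("spark.databricks.io.cache.enabled", "true")


if __name__ == "__main__":
    # Hypothetical table definition, for illustration only.
    print(create_partitioned_table_sql(
        "sales.events",
        {"event_id": "BIGINT", "event_date": "DATE"},
        ["event_date"],
    ))
```

Partitioning on a date column is shown only as a common pattern; very small tables or high-cardinality columns generally do not benefit from it.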

Conclusion

By combining Databricks for data transformations with Delta Lake’s storage and reliability features, organizations can build highly efficient and scalable ETL workflows. This integration enables automated data processing, ensures data quality and reduces costs.

Whether you are dealing with batch or streaming data, using Databricks and Delta Lake together is a significant step forward for modern data engineering.
