Configure Multiple Data Sources to Denodo’s Data Virtualization Platform

In our last article, we offered an overview of Data Virtualization and shared many of the benefits it yields for organizations seeking to optimize their data operations. In this follow up, we will demonstrate how Denodo connects to a variety of data sources and what it requires in order to do so. We will then show how multiple data sources may be integrated using Denodo and then made visual for analytics through the use of a data visualization tool like Tableau.

Though Denodo is capable of connecting to many more data sources, and certainly at the same time, today we will focus on the following to demonstrate Denodo’s versatility.

- Spark-SQL and Hive

- Oracle

- NoSQL Database (Mongo DB and HBase)

- XML Files

- Azure Blob Storage

Spark-SQL and Hive as a Data Source

Below are the prerequisites to connect Spark-SQL and Hive using Denodo Platform.

- Denodo can retrieve data from Spark-SQL (a Spark Module running on Apache Hadoop YARN on Hortonworks ecosystem)

- Spark-SQL supports reading and writing data stored in Apache Hive (if Hive dependencies can be found on the class path, Spark will load them automatically)

- Note that the metastore.warehouse.dirproperty in hive-site.xml is deprecated since Spark 2.0.0. Instead, use spark.sql.warehouse.dir to specify the default location of database in warehouse.

Below are the steps to configure Hive and Spark-SQL as a data source to enable Data Virtualization with Denodo

Navigate to Files-> Data Source -> JDBC

Once JDBC data source is selected, we need to configure the data source to connect to the hive server (either on-premise or on the server its hosted).

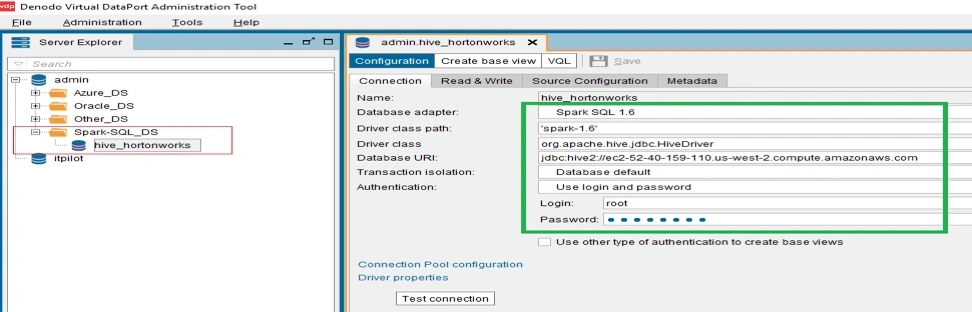

In the Configuration tab, the following parameters need to be filled:

- Name -> Hive Server (you can choose any relevant name)

- Data Adapter -> Spark SQL 1.6 (Select from the drop box)

- Driver Class path -> ‘spark-1.6’

- Driver class -> org.apache.hive.jdbc.HiveDriver

- Database URL -> jdbc:hive2://ecx-xx-xx-xxx-xxx.us-xxxxx-2.compute.amazonaws.com (public IP)

- Transaction Isolation: Default database

- Authentication-> fill in the login password of the Hive server

- Once these steps are performed, click on test the connection.

- If the test connection is successful, we can access the Hive and Spark-SQL database.

The above screenshot shows the configuration parameters for Spark-SQL and Hive.

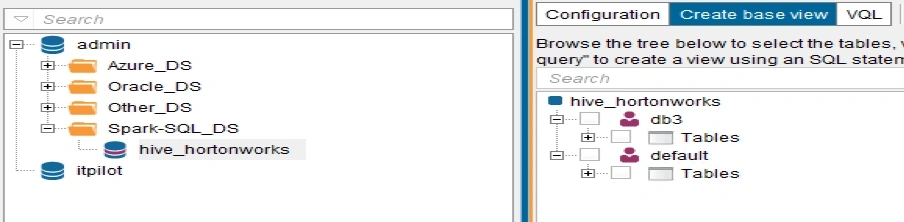

The above screenshot shows the available database under Hive server which can be accessed.

Oracle as a Data Source

Denodo can retrieve data from RDBMS sources such as Oracle My-SQL. Below are the steps to configure Oracle as a data source for Denodo Data Virtualization.

Below are the steps to configure Oracle as a data source to enable Data Virtualization with Denodo

Navigate to Files-> Data Source -> JDBC

Once the JDBC data source is selected, we need to configure the data source to connect to the Oracle Database server (either on-premise or on the server where it is hosted).

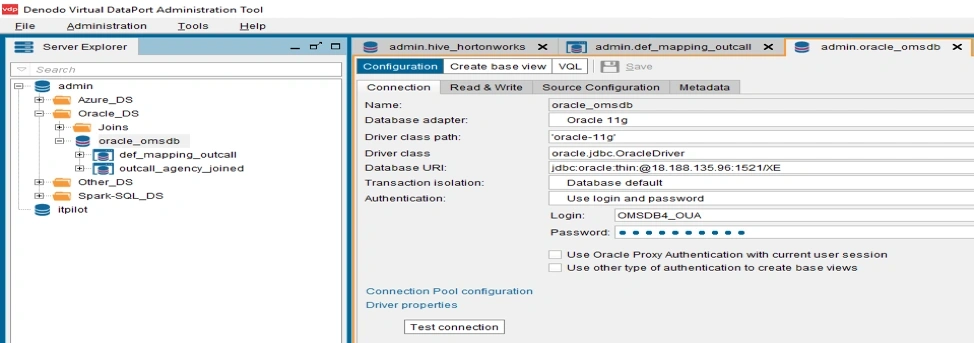

In the Configuration tab, the following parameters need to be filled:

- Name -> Oracle Server (you can choose any relevant name)

- Data Adapter -> Oracle 11g (Select from the drop box)

- Driver Class path -> ‘oracle-11g’

- Driver class -> oracle.jdbc.OracleDriver

- Database URL -> jdbc:oracle:thin:@xx.xxx.xxx.xx:1521/XE (public IP)

- Transaction Isolation: Default database

- Authentication-> fill in the login password for Oracle server and validate it

- Once these steps are performed, click to test the connection.

- If the test connection is successful, we can access the tables in the database.

The above screenshot shows the configuration parameters for Oracle Databases as a data source.

NoSQL Database as a Data Source

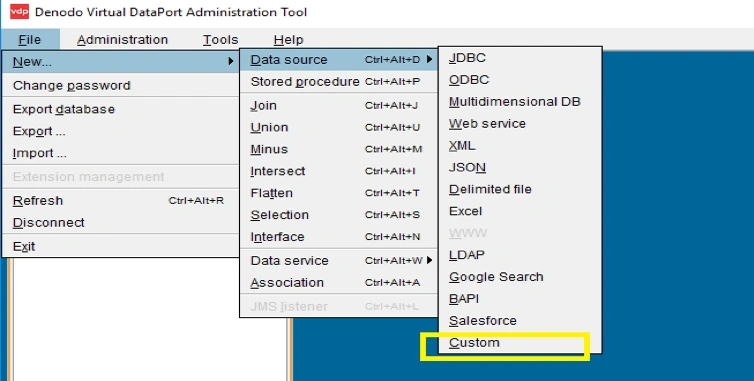

To connect Denodo to HBase or Mongo DB, we need the the corresponding HBase Custom Wrapper or Mongo DB Custom Wrapper.

Below are the steps to configure a NoSQL database as a data source to enable Data Virtualization with Denodo

Navigate to Files-> Data Source -> Custom

Once Custom data source is selected, we need to configure the data source to connect to the Mongo DB server (either on-premise or on the server where it is hosted).

In the Configuration tab, the following parameters need to be filled:

- Name -> MongoDB Server (you can choose any relevant name)

- Class Name -> com.denodo.connect.mongodb.wrapper.MongoDBWrapper

- Select Jars -> Denodo-mongodb-customwrapper-7.0 (Mongo DB) and Denodo-hdfs-customwrapper-6.0 (for HBase).

- Authentication-> fill in the login password for Oracle server and validate it.

- Once these steps are performed, click to test the connection.

- If the test connection is successful, we can access the tables in the database.

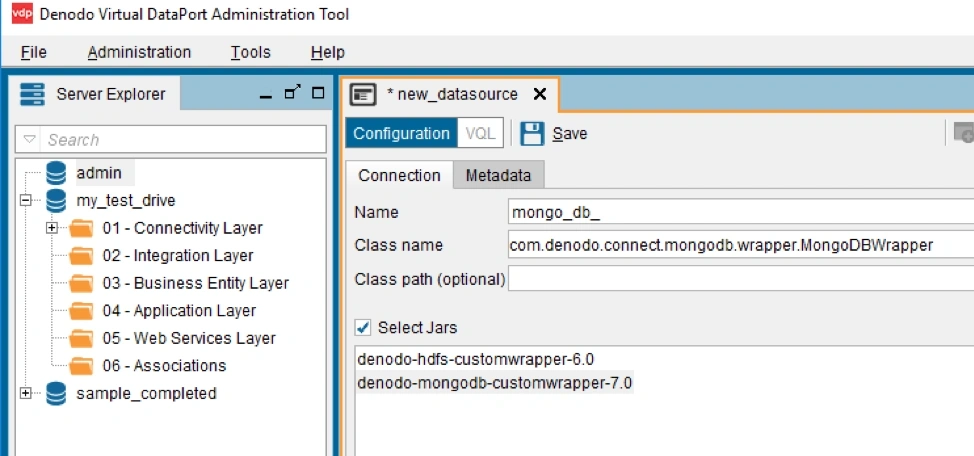

The above screenshot shows how to select the data source for Mondo DB on Denodo Tool.

The above screenshot shows how to configure Mondo DB for Denodo with the specific wrapper.

XML File as a Data Source

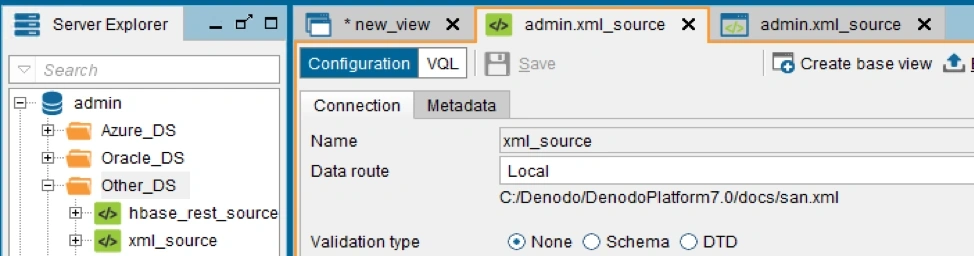

To create an XML data source to access an XML document, right-click on the Server.

Below are the steps to configure an XML file as a data source to enable Data Virtualization with Denodo

In the Virtual Data Port Administration Tool navigate to: New > Data source > XML.

The following data are requested in this dialog:

- Name: Name of the new data source.

- Data Route: Path to the XML file that contains the required data.

- Ignore route errors: If selected, the Server will ignore the errors occurred when accessing the file(s) to which the data source points. This option is not meant to be used when the data source reads a single file. Its main purpose is when the data source points to a collection of files and you know some of them may be missing.

- Validation type: If selected, the structure of the input XML file will be obtained from a Schema or a DTD. If “None” is selected, Virtual Data Port will analyze the XML document to infer its schema.

- Validate route: Path to the Schema (XSD) or DTD of the input XML document. If present, instead of obtaining the XML document from the “Data Route” to calculate the schema of the new base view, the Server will use the contents of the XSD or the DTD.

- Validate data: If selected, Virtual Data Port will validate the input XML file every time the data source is accessed.

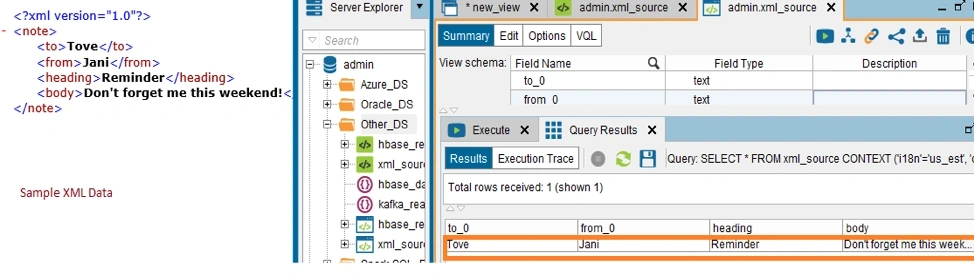

The above screenshot shows how to configure an Xml file as data source.

The above screenshot displays the output as tabular form extracted from XML file as data source.

Azure Blob Storage as a Data Source

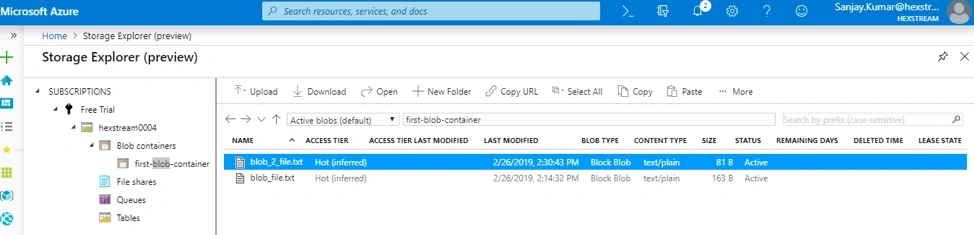

To access to the Microsoft Azure Storage, we first need an Azure subscription where we can create a blob service container. We can upload the block blob under the blob storage container.

Below are the steps to configure Azure Blob Storage as a data source on the Denodo Data Virtualization Platform.

- In the Virtual Data Port Administration Tool, select the type of data source needed depending on the type of file which you want to recover from Azure Blob storage by Navigating to New > Data Source in the contextual menu

- In this example, a “Delimited File” data source will be used.

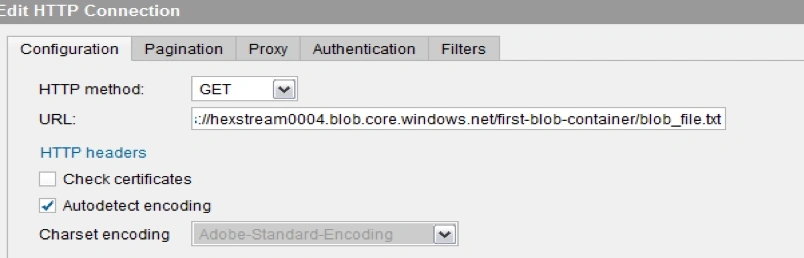

- Select the “HTTP Client” option as the “Data Route” parameter.

- Configure the “HTTP Client” data route to access the Azure Blob storage by clicking the “Configure” button.

- HTTP Method: GET.

- URL: Specify the URL to retrieve the files from the Azure Blob storage.

- Configure the “HTTP Headers”.

- In the “Authentication” tab, choose the authentication as “OAuth 2.0” in the drop-down list.

- Specify the Client Identifier and Client secret that corresponds with Blob storage.

- Launch the OAuth Credentials Wizard by clicking the link.

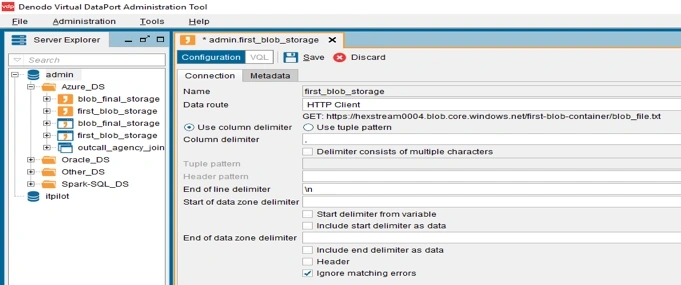

The above screenshot shows how to create an Azure Blob container.

The above screenshots show how to configure Denodo to Azure Blob Storage. Make sure the data source is Delimited.

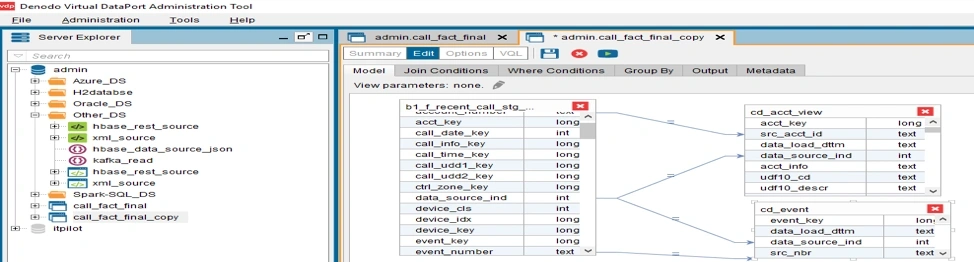

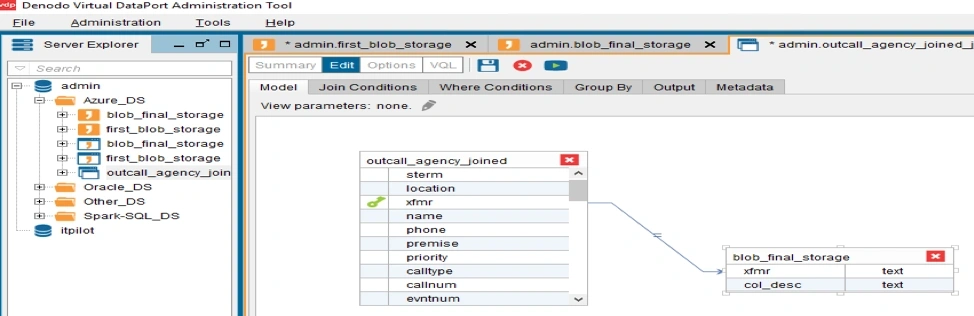

Integrating Data Sources Using Denodo Virtualization Tool

Denodo leverages the functionality of transformations (join, union, etc.) on Tables extracted from RDMBS data source.

Below are the steps to perform Join

- In the Virtual Data Port Administration Tool, Navigate to Files-> New -> join

- Select the source tables and drag it on the console. We can perform joins based on where, group by etc. conditions, we can filter the data, change the data type, and more.

The above screenshots show the joining of tables from different data sources (i.e. Oracle and Blob Storage).

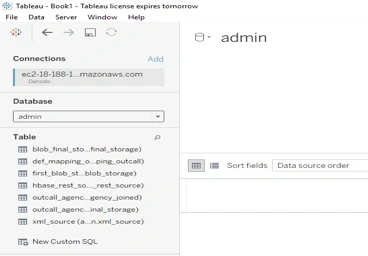

Connecting the virtualization tool to the visualization tool to perform analytics

Below are the steps to make the connection between Denodo and Tableau and set up the data source.

- Start Tableau. Under Connect, select Denodo. For a complete list of data connections, select More under To a Server. Then do the following:

- Enter the name of the server that hosts the database.

- Enter the name of the database.

- Select how you want to sign in to the server. Specify whether to use Integrated Authentication or Username and Password. If the server is password protected, and you are not in a Kerberos environment, you must enter the user name and password.

Note: If you’re using a Mac, and it is not attached to the domain correctly, the Mac won’t know that Kerberos is being used in the domain, and the Authentication drop-down list won’t be available.

- Select the Require SSL check box when connecting to an SSL server.

- Select Sign In.

- If Tableau can’t make the connection, verify that your credentials are correct. If you still can’t connect, your computer is having trouble locating the server. Contact your network administrator or database administrator.

The above screenshot shows the connection of Tableau with Denodo to perform analytics.

Conclusion

As organizations examine the ways in which they should expand and improve upon their data operations, data virtualization is a valuable tool to enable ease of access and integration across systems. When coupled with data visualization using a tool like Tableau, the value of data virtualization is extended by allowing business users to perform analytics to ultimately drive stronger decision making across their enterprise.

Let's get your data streamlined today!

Other Blogs

Why Utilities Need to Embrace Modern Technologies to Improve Cloud Adoption

Utilities have begun adopting cloud-based capabilities across a cluster of key business functions such as customer experience, asset management, and r

In the race to digital transformation, where should utilities start?

Almost every business leader in the utility space and beyond has heard the buzz about “digital transformation.” And for good reason! The right digital

Intelligent Process Automation is the Future of Utility Operations

There is no doubt that utilities have begun adopting process automation solutions at an accelerating rate. Much commentary on this trend, however, sug